13. Temporal networks#

In this notebook we show how to perform some basic operations on temporal graphs, reproducing the main plots appearing in the notes. Besides the classical packages, we will deploy some functions that are contained in the Tnet.py file whose content of this file is provided in the next notebook. If you want to run this notebook, create a folder named src, and add a file named Tnet.py with the codes insde of it.

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

import matplotlib as mpl

import matplotlib.cm as cm

from copy import copy

import networkx as nx

# upload the codes in the src folder

from src.Tnet import *

import warnings

warnings.filterwarnings("ignore")

---------------------------------------------------------------------------

ModuleNotFoundError Traceback (most recent call last)

/tmp/ipykernel_74460/2886845828.py in <module>

8

9 # upload the codes in the src folder

---> 10 from src.Tnet import *

11

12 import warnings

ModuleNotFoundError: No module named 'src'

# load the datasets in the tij format

tij_school = pd.read_csv('Data/SP/School_tij.csv')

tij_conference = pd.read_csv('Data/SP/Conference_tij.csv')

tij_office = pd.read_csv('Data/SP/Office_tij.csv')

tij_village = pd.read_csv('Data/SP/Village_tij.csv')

# we print the first rows of one of these datasets to provide an example

tij_school.head()

---------------------------------------------------------------------------

FileNotFoundError Traceback (most recent call last)

/tmp/ipykernel_74460/1109803879.py in <module>

1 # load the datasets in the tij format

----> 2 tij_school = pd.read_csv('Data/SP/School_tij.csv')

3 tij_conference = pd.read_csv('Data/SP/Conference_tij.csv')

4 tij_office = pd.read_csv('Data/SP/Office_tij.csv')

5 tij_village = pd.read_csv('Data/SP/Village_tij.csv')

~/anaconda3/lib/python3.9/site-packages/pandas/util/_decorators.py in wrapper(*args, **kwargs)

209 else:

210 kwargs[new_arg_name] = new_arg_value

--> 211 return func(*args, **kwargs)

212

213 return cast(F, wrapper)

~/anaconda3/lib/python3.9/site-packages/pandas/util/_decorators.py in wrapper(*args, **kwargs)

329 stacklevel=find_stack_level(),

330 )

--> 331 return func(*args, **kwargs)

332

333 # error: "Callable[[VarArg(Any), KwArg(Any)], Any]" has no

~/anaconda3/lib/python3.9/site-packages/pandas/io/parsers/readers.py in read_csv(filepath_or_buffer, sep, delimiter, header, names, index_col, usecols, squeeze, prefix, mangle_dupe_cols, dtype, engine, converters, true_values, false_values, skipinitialspace, skiprows, skipfooter, nrows, na_values, keep_default_na, na_filter, verbose, skip_blank_lines, parse_dates, infer_datetime_format, keep_date_col, date_parser, dayfirst, cache_dates, iterator, chunksize, compression, thousands, decimal, lineterminator, quotechar, quoting, doublequote, escapechar, comment, encoding, encoding_errors, dialect, error_bad_lines, warn_bad_lines, on_bad_lines, delim_whitespace, low_memory, memory_map, float_precision, storage_options)

948 kwds.update(kwds_defaults)

949

--> 950 return _read(filepath_or_buffer, kwds)

951

952

~/anaconda3/lib/python3.9/site-packages/pandas/io/parsers/readers.py in _read(filepath_or_buffer, kwds)

603

604 # Create the parser.

--> 605 parser = TextFileReader(filepath_or_buffer, **kwds)

606

607 if chunksize or iterator:

~/anaconda3/lib/python3.9/site-packages/pandas/io/parsers/readers.py in __init__(self, f, engine, **kwds)

1440

1441 self.handles: IOHandles | None = None

-> 1442 self._engine = self._make_engine(f, self.engine)

1443

1444 def close(self) -> None:

~/anaconda3/lib/python3.9/site-packages/pandas/io/parsers/readers.py in _make_engine(self, f, engine)

1733 if "b" not in mode:

1734 mode += "b"

-> 1735 self.handles = get_handle(

1736 f,

1737 mode,

~/anaconda3/lib/python3.9/site-packages/pandas/io/common.py in get_handle(path_or_buf, mode, encoding, compression, memory_map, is_text, errors, storage_options)

854 if ioargs.encoding and "b" not in ioargs.mode:

855 # Encoding

--> 856 handle = open(

857 handle,

858 ioargs.mode,

FileNotFoundError: [Errno 2] No such file or directory: 'Data/SP/School_tij.csv'

# load the datasets in the tijtau format

tijtau_school = pd.read_csv('Data/SP/School_tijtau.csv')

tijtau_conference = pd.read_csv('Data/SP/Conference_tijtau.csv')

tijtau_office = pd.read_csv('Data/SP/Office_tijtau.csv')

tijtau_village = pd.read_csv('Data/SP/Village_tijtau.csv')

# we print the first rows of one of these datasets to provide an example

tijtau_school.head()

---------------------------------------------------------------------------

FileNotFoundError Traceback (most recent call last)

/tmp/ipykernel_74460/619524950.py in <module>

1 # load the datasets in the tijtau format

2 # tijtau_school = pd.read_csv('Data/SP/School_tijtau.csv')

----> 3 tijtau_conference = pd.read_csv('Data/SP/Conference_tijtau.csv')

4 tijtau_office = pd.read_csv('Data/SP/Office_tijtau.csv')

5 tijtau_village = pd.read_csv('Data/SP/Village_tijtau.csv')

~/anaconda3/lib/python3.9/site-packages/pandas/util/_decorators.py in wrapper(*args, **kwargs)

209 else:

210 kwargs[new_arg_name] = new_arg_value

--> 211 return func(*args, **kwargs)

212

213 return cast(F, wrapper)

~/anaconda3/lib/python3.9/site-packages/pandas/util/_decorators.py in wrapper(*args, **kwargs)

329 stacklevel=find_stack_level(),

330 )

--> 331 return func(*args, **kwargs)

332

333 # error: "Callable[[VarArg(Any), KwArg(Any)], Any]" has no

~/anaconda3/lib/python3.9/site-packages/pandas/io/parsers/readers.py in read_csv(filepath_or_buffer, sep, delimiter, header, names, index_col, usecols, squeeze, prefix, mangle_dupe_cols, dtype, engine, converters, true_values, false_values, skipinitialspace, skiprows, skipfooter, nrows, na_values, keep_default_na, na_filter, verbose, skip_blank_lines, parse_dates, infer_datetime_format, keep_date_col, date_parser, dayfirst, cache_dates, iterator, chunksize, compression, thousands, decimal, lineterminator, quotechar, quoting, doublequote, escapechar, comment, encoding, encoding_errors, dialect, error_bad_lines, warn_bad_lines, on_bad_lines, delim_whitespace, low_memory, memory_map, float_precision, storage_options)

948 kwds.update(kwds_defaults)

949

--> 950 return _read(filepath_or_buffer, kwds)

951

952

~/anaconda3/lib/python3.9/site-packages/pandas/io/parsers/readers.py in _read(filepath_or_buffer, kwds)

603

604 # Create the parser.

--> 605 parser = TextFileReader(filepath_or_buffer, **kwds)

606

607 if chunksize or iterator:

~/anaconda3/lib/python3.9/site-packages/pandas/io/parsers/readers.py in __init__(self, f, engine, **kwds)

1440

1441 self.handles: IOHandles | None = None

-> 1442 self._engine = self._make_engine(f, self.engine)

1443

1444 def close(self) -> None:

~/anaconda3/lib/python3.9/site-packages/pandas/io/parsers/readers.py in _make_engine(self, f, engine)

1733 if "b" not in mode:

1734 mode += "b"

-> 1735 self.handles = get_handle(

1736 f,

1737 mode,

~/anaconda3/lib/python3.9/site-packages/pandas/io/common.py in get_handle(path_or_buf, mode, encoding, compression, memory_map, is_text, errors, storage_options)

854 if ioargs.encoding and "b" not in ioargs.mode:

855 # Encoding

--> 856 handle = open(

857 handle,

858 ioargs.mode,

FileNotFoundError: [Errno 2] No such file or directory: 'Data/SP/Conference_tijtau.csv'

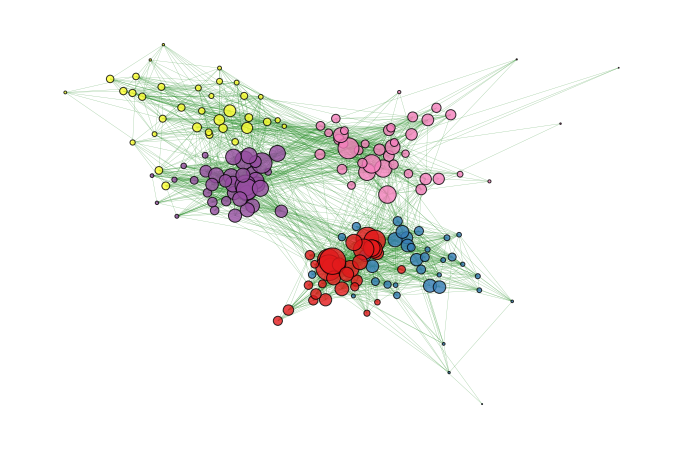

13.1. Create and plot the aggregated graph#

We consider the school dataset and create its static aggregated version. We plot it, color-coding the nodes according to their class affiliation. As shown in the cell above, the dataframe tij_school contains two additional columns (C1 and C2) that indicate the class to which i and j belong to, respectively.

# copy the tij_school in df. This is done for two reasons:

# * first, if you want to repeat the analysis on a different graph you only have to change the name once.

# * secondly, remember that when you x = y in python it is a copy by reference and not by name. Using the copy() command you avoid making modification to the original input variable.

df = copy(tij_school)

# add a weight column to the dataframe: in the tij representation all contacts have equal weight

df['w'] = 1

# aggregate the contact by the interacting indices

df = df.groupby(['i', 'j']).sum().reset_index()[['i', 'j', 'w']]

df.head()

| i | j | w | |

|---|---|---|---|

| 0 | 600 | 609 | 53 |

| 1 | 600 | 610 | 20 |

| 2 | 600 | 613 | 567 |

| 3 | 600 | 616 | 1 |

| 4 | 600 | 623 | 4 |

In the dataframe df we built, we removed the column t and substituted encoded in w the number of appearances of a single link. We now build a weighted adjacency matrix of this graph using the scipy package and then passing it to networkx to obtain the graph. To do so, we must first create a dictionary that converts the indices contained in df into integers between \(1\) and \(n\), where \(n\) is the number of nodes.

# make a list of all nodes

all_nodes = np.unique(df[['i', 'j']])

# count how many nodes there are

n = len(all_nodes)

# create the dictionary between each node id and an integer

NodeMapper = dict(zip(all_nodes, np.arange(n)))

# map the nodes id to the integer

df.i = df.i.map(lambda x: NodeMapper[x])

df.j = df.j.map(lambda x: NodeMapper[x])

df.head()

| i | j | w | |

|---|---|---|---|

| 0 | 0 | 7 | 53 |

| 1 | 0 | 8 | 20 |

| 2 | 0 | 11 | 567 |

| 3 | 0 | 14 | 1 |

| 4 | 0 | 21 | 4 |

# convert the edge list into a sparse matrix: df.i, df.j are the coordinates of the non-zero entries of W and df.w is the value

W = csr_matrix((df.w, (df.i, df.j)), shape = (n,n))

# symmetrize the matrix

W = W + W.T

# build the graph from the matrix W

G = nx.from_scipy_sparse_array(W)

Now we plot

# this return a dictionary giving x and y coordinates of each node so that nodes that are more densely connected are close to one another and it results in a better plot

pos = nx.spring_layout(G)

# we set the node size proportional to the total interaction time

node_size = 0.25*(W@np.ones(n))

edge_size = 0.3

# create the class label vector

classes = []

for node in all_nodes:

_df = tij_school[tij_school.i == node]

if len(_df) > 1:

classes.append(_df.iloc[0].C1)

else:

_df = tij_school[tij_school.j == node]

classes.append(_df.iloc[0].C2)

# plot functions

all_classes = np.unique(classes)

ClassMapper = dict(zip(all_classes, np.arange(len(all_classes))))

norm = mpl.colors.Normalize(vmin = 0, vmax = len(all_classes))

cmap = cm.Set1

m = cm.ScalarMappable(norm = norm, cmap = cmap)

colors = [m.to_rgba(ClassMapper[c]) for c in classes]

plt.figure(figsize = (12,8))

nx.draw_networkx_edges(G, pos, alpha = 0.5, width = edge_size, edge_color = "forestgreen")

nx.draw_networkx_nodes(G, pos, node_size = node_size, node_color = colors, alpha = 0.8, edgecolors = 'k')

plt.axis('off')

# uncomment to save

# plt.savefig('Figures/graphPlot.pdf', bbox_inches = 'tight', dpi = 400)

plt.show();

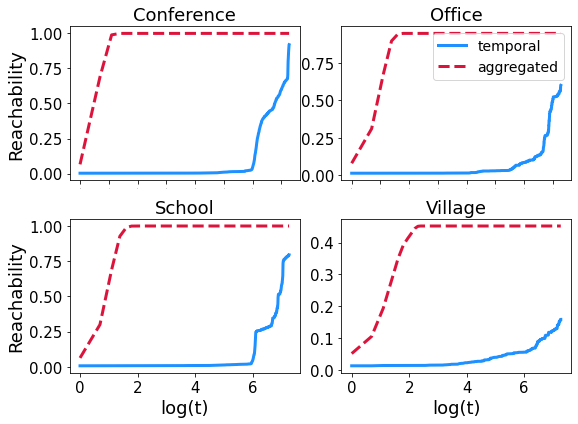

13.2. Reachability#

As we mentioned in the course, the reachability (i.e the fraction of nodes that can be reached by a walk on a graph as a function of time) is small in temporal graphs than it is on static ones. Here we consider \(4\) SocioPatterns and, for each of them, we compare the reachability over the first \(8\) hours of measurement for the temporal graph and its aggregated counterpart.

T = 1440 # 8 hours (8*3600/20)

names = ['Conference', 'Office', 'School', 'Village']

dfv = [tij_conference, tij_office, tij_school, tij_village]

R, Ragg = [], []

for counter, tij in enumerate(dfv):

print(f'Dataset: {names[counter]}')

# select iteratively one of the four graphs and limit the observations to the first 8 hours

df = copy(tij)

df = df[df.t < T]

# create a mapping between the nodes and integers

all_nodes = np.unique(df[['i', 'j']])

n = len(all_nodes)

NodeMapper = dict(zip(all_nodes, np.arange(n)))

df.i = df.i.map(lambda x: NodeMapper[x])

df.j = df.j.map(lambda x: NodeMapper[x])

# compute the reachability vector

R.append(ComputeReachabilityVector(df, T))

################################################################

# create the aggregated (unweighted) version of the graph

df_g = df.groupby(['i', 'j']).mean().reset_index()

W = csr_matrix((np.ones(len(df_g)), (df_g.i, df_g.j)), shape = (n,n))

W = W + W.T + diags(np.ones(n))

Wt = [W for t in range(T)]

# compute the reachability vector

Ragg.append(ReachabilityFromAdjacencyList(Wt))

Dataset: Conference

Dataset: Office

Dataset: School

Dataset: Village

Progress: 99%

# PLOT

fig, ax = plt.subplots(2,2, figsize = (8, 6))

tv = np.arange(T)

for counter in range(4):

a, b = int(counter/2), counter%2

ax[a,b].plot(np.log(tv+1), R[counter], color = 'dodgerblue', label = 'temporal', linewidth = 3)

ax[a,b].plot(np.log(tv+1), Ragg[counter], color = 'crimson', linestyle = '--', label = 'aggregated',

linewidth = 3)

if b == 0:

ax[a,b].set_ylabel('Reachability', fontsize = 18)

if a == 1:

ax[a,b].set_xlabel('log(t)', fontsize = 18)

ax[a, b].tick_params(axis = 'x', labelsize = 15)

else:

ax[a,b].tick_params(axis = 'x', labelsize = 0)

ax[a,b].tick_params(axis = 'y', labelsize = 15)

ax[a,b].set_title(names[counter], fontsize = 18)

ax[0,1].legend(fontsize = 14)

plt.tight_layout()

# uncomment to save

# plt.savefig('Figures/tnet_reachability.pdf', bbox_inches = 'tight', dpi = 400)

plt.show();

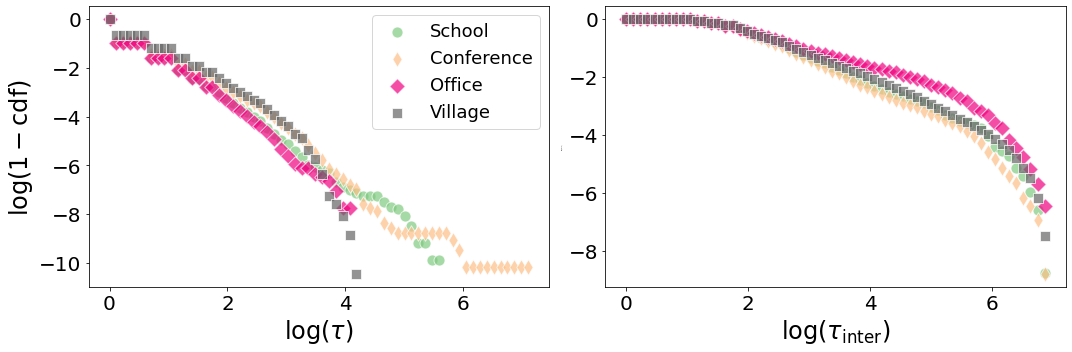

13.3. Contact duration and interevent statistics#

We now look at the distribution of the event duration and of the interevent duration.

names = ['School', 'Conference', 'Office', 'Village']

dfv = [tijtau_school, tijtau_conference, tijtau_office, tijtau_village]

intervals = []

for i, df in enumerate(dfv):

print(names[i])

intervals.append(ComputeIntereventStatistic(copy(df)))

print('\n')

School

Progress: 99%

Conference

Progress: 99%

Office

Progress: 99%

Village

Progress: 99%

# PLOT

fig, ax = plt.subplots(1, 2, figsize = (15,5))

markers = ['o', 'd', 'D', 's']

norm = mpl.colors.Normalize(vmin = 0, vmax = 3)

cmap = cm.Accent

m = cm.ScalarMappable(norm = norm, cmap = cmap)

allt = np.logspace(0, 5, 100)

for i, df in enumerate(dfv):

# compute the probability that the event duration is larger than a threshold

cdf = np.array([np.mean(df.τ >= t) for t in allt])

idx = cdf > 0

ax[0].scatter(np.log(allt[idx]), np.log(cdf[idx]), marker = markers[i],s = 120, color = m.to_rgba(i),

edgecolor = 'white', alpha = .7, label = names[i])

# keep only the interevent durations below 1000. Those that are above this number are likely associated

# to different days

x = intervals[i][intervals[i] < 1000]

cdf = np.array([np.mean(x >= t) for t in allt])

idx = cdf > 0

ax[1].scatter(np.log(allt[idx]), np.log(cdf[idx]), marker = markers[i],s = 120, color = m.to_rgba(i),

edgecolor = 'white', alpha = .7)

ax[0].tick_params(axis = 'both', which = 'major', labelsize = 20)

ax[1].tick_params(axis = 'both', which = 'major', labelsize = 20)

ax[0].legend(fontsize = 18)

ax[0].set_xlabel(r'${\rm log}(\tau)$', fontsize = 24)

ax[0].set_ylabel(r'${\rm log(1-cdf)}$', fontsize = 24)

ax[1].set_xlabel(r'${\rm log}(\tau_{\rm inter})$', fontsize = 24)

ax[1].set_ylabel(r'${\rm log(1-cdf)}$', fontsize = 0)

plt.tight_layout()

# uncomment to save

# plt.savefig('Figures/tnet_pl.pdf', bbox_inches = 'tight', dpi = 400)

plt.show();

13.4. Burstiness#

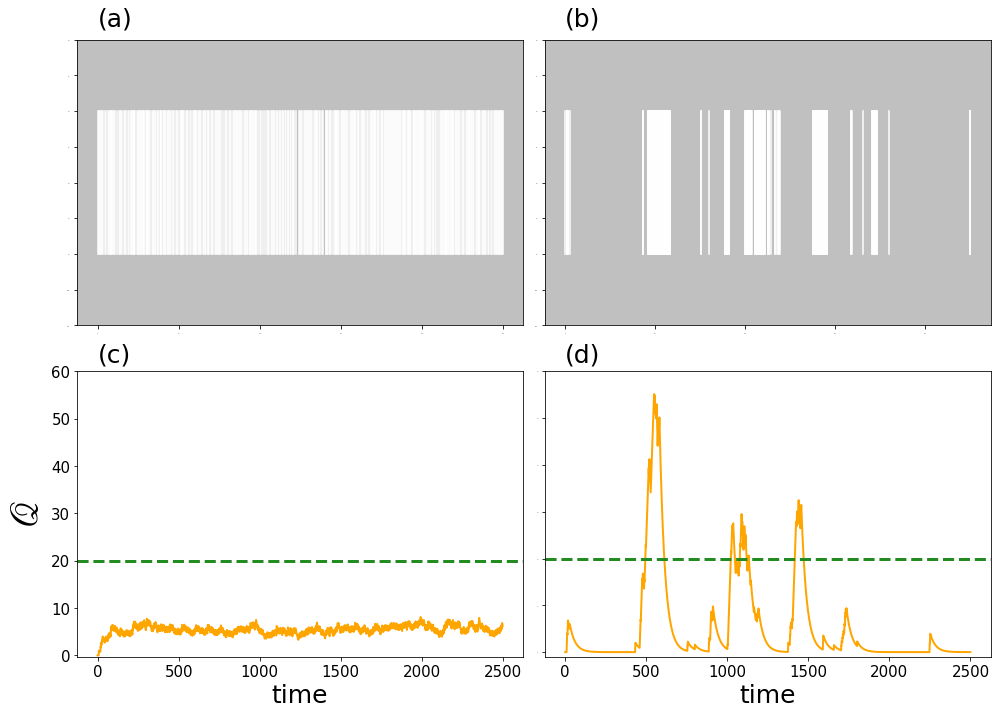

We now visualize the effect of burstiness. We take the Conference network and select one of its nodes \(i\) and look at the temporal series of its interaction times. We then show a simple modeled quantity \(\mathscr{Q}\) that increases by one for every contact \(i\) has and decreases by the \(3\%\) at each time stamp in which there is no interaction.

We compare the results with sythetic generated data in which the temporal series is obtained from a Poisson process. The results show that, even though the number of contact events is very similar, \(\mathscr{Q}\) reaches much larger values for the bursty dynamics.

# model parameters

x0 = 1

α = 0.03

T = 2500

########################################

########### POISSON DYNAMICS ###########

########################################

tv_Poi = [0]

flag = 0

# create the inter-event distribution

while flag == 0:

newt = tv_Poi[-1] + np.random.poisson(7)

tv_Poi.append(newt)

if newt > T:

flag = 1

tv_Poi = np.array(tv_Poi)

# generate the signal Q

Q_Poi = np.zeros(T)

for t in range(1, T):

if t in tv_Poi:

Q_Poi[t] = Q_Poi[t-1] + x0

else:

Q_Poi[t] = Q_Poi[t-1]*(1-α)

# compute the burstiness

inter = (tv_Poi - np.roll(tv_Poi, 1))[1:]

s = np.std(inter)/np.mean(inter)

B = (s-1)/(s+1)

print('Poissonian case')

print(f'Number of events: {len(tv_Poi)}')

print(f'Burstiness: B = {B}')

print('\n')

########################################

############## REAL DATA ###############

########################################

# select only the contacts involving node index

df = copy(tij_conference)

index = 1467

idx = np.logical_or(df.i == index, df.j == index)

df = df[idx]

# generate the signal Q

Q = np.zeros(T)

tvr = np.sort(df.t.values)

for t in range(1, T):

if t in tvr:

Q[t] = Q[t-1] + x0

else:

Q[t] = Q[t-1]*(1-α)

# compute the burstiness

inter = (df.t - np.roll(df.t, 1))[1:].values

s = np.std(inter)/np.mean(inter)

B = (s-1)/(s+1)

print('Real data')

print(f'Number of events: {len(df)}')

print(f'Burstiness: B = {B}')

Poissonian case

Number of events: 359

Burstiness: B = -0.44566561125641657

Real data

Number of events: 349

Burstiness: B = 0.6981801093753959

# PLOT

fig, ax = plt.subplots(2, 2, figsize = (14, 10))

for t in tv_Poi:

if t < T:

ax[0,0].plot([t, t], [0,1], color = 'white')

ax[0,0].set_facecolor('silver')

ax[0,0].set_ylim(-.5, 1.5)

ax[0,0].tick_params(axis = 'both', labelsize = 0)

ax[0,0].text(0, 1.6, '(a)', fontsize = 25)

######################################################

for t in df.t:

if t < T:

ax[0,1].plot([t, t], [0,1], color = 'white')

ax[0,1].set_facecolor('silver')

ax[0,1].set_ylim(-.5, 1.5)

ax[0,1].tick_params(axis = 'both', labelsize = 0)

ax[0,1].text(0, 1.6, '(b)', fontsize = 25)

#######################################################

ax[1, 0].plot(Q_Poi, linewidth = 2, color = 'orange')

ax[1,0].tick_params(axis = 'both', labelsize = 15)

ax[1,0].set_xlabel('time', fontsize = 25)

ax[1,0].set_ylabel(r'$\mathscr{Q}$', fontsize = 40)

ax[1,0].text(0, 62, '(c)', fontsize = 25)

ax[1,0].set_ylim(-0.3,60)

ax[1, 0].axhline(20, color = 'forestgreen', linewidth = 3, linestyle = '--')

# ##################################################

ax[1,1].plot(Q, linewidth = 2, color = 'orange')

ax[1,1].tick_params(axis = 'x', labelsize = 15)

ax[1,1].tick_params(axis = 'y', labelsize = 0)

ax[1,1].set_xlabel('time', fontsize = 25)

ax[1,1].text(0, 62, '(d)', fontsize = 25)

ax[1,1].set_ylim(-1,60)

ax[1,1].axhline(20, color = 'forestgreen', linewidth = 3, linestyle = '--')

plt.tight_layout()

# uncomment to save

# plt.savefig('Figures/tnet_burst.pdf', bbox_inches = 'tight', dpi = 400)

plt.show();